December 14, 2025

Search has changed. It is no longer about rankings or clicks but about whether systems understand and trust your information.

The Confidence in Search Systems Framework helps close that gap. It combines what we can control with what we can only influence and measures how confident systems are in our content.

It began as a way to organise my own thinking and grew into a full model for understanding visibility in the age of AI search.

The future of SEO is not traffic. It is confidence.

Download the whitepaper: The Confidence in Search Systems Framework

Organic traffic is falling. You can see it in your analytics. Your clients can see it in theirs. Every SEO is watching the line curve down and trying to work out why.

The truth is simple. Search itself has changed. What was once a predictable web of links and rankings has become a network of reasoning systems that learn, interpret and act. People no longer type short keywords. They ask questions in natural language. Machines no longer match results. They reason about meaning, intent and trust.

Yet most of the industry still measures success through the same old numbers: rankings, clicks and traffic. Those numbers no longer tell the full story. Visibility now happens inside AI summaries, knowledge panels and conversational responses that may never lead to a single click.

That is the accountability gap. Work is being done. Influence is growing. But the evidence is missing.

The Confidence in Search Systems Framework was built to close that gap. It gives a way to understand how certainty, uncertainty and influence interact across modern search ecosystems. It helps teams measure and explain their impact when traditional metrics no longer apply.

For years, SEO followed a simple formula: optimise the content, earn the ranking, gain the traffic. Action led to outcome. Cause met effect.

AI-driven search has broken that formula. Large language models do not rank pages. They weigh probabilities. They do not return lists. They generate answers. They do not follow rules. They learn from patterns.

This shift has created three major challenges.

Visibility has not disappeared. It has moved. Brands can appear in summaries, graphs and conversational systems without generating a single click. Analytics cannot measure that influence, and the industry has yet to create a reliable way to report it.

Terms such as AI Overviews optimisation or generative experience optimisation are starting to appear, but they are tactics without a framework.

Before we can measure confidence, we need to redefine visibility.

Visibility is no longer about where you rank. It is about whether systems understand and trust you. It now exists in three layers.

The higher the layer, the greater the confidence that machines not only find your content but believe in it.

This is where modern SEO now lives: at the point where understanding meets trust.

The Confidence in Search Systems Framework starts with a simple idea. Success in modern search cannot be defined by rankings alone. It has to be understood as a balance between what we can control and what we can only influence.

Visibility is no longer a single outcome. It is a system of confidence.

At its core, the framework separates deterministic precision, which is the logical and testable side of SEO, from probabilistic reasoning, where results depend on interpretation, trust and context. By mapping both sides, we can see what works, why it works, and how confident we can be that it will keep working.

The framework sits on two intersecting dimensions that describe how we reason about cause and effect in search.

Deterministic and Probabilistic

Deterministic reasoning is the foundation of traditional SEO. It is based on logic and repeatability. Add a canonical tag and duplication resolves. Fix a redirect and authority flows correctly. These are predictable actions with measurable outcomes.
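A deterministic task is one you can turn into a pass or fail check. As a minimal sketch of that idea, the snippet below uses Python's standard `html.parser` to confirm that a page declares exactly one canonical URL and that it matches the one you expect. The names `CanonicalFinder` and `check_canonical` are illustrative and not part of the framework.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> found in a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr_map = dict(attrs)
        # rel can hold several space-separated tokens, so split before testing.
        rel_values = (attr_map.get("rel") or "").lower().split()
        if "canonical" in rel_values and attr_map.get("href"):
            self.canonicals.append(attr_map["href"])


def check_canonical(html, expected_url):
    """Return True only if the page has exactly one canonical and it matches."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals == [expected_url]
```

The same input always gives the same answer, so a check like this can be repeated and automated.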

Probabilistic reasoning accepts that some outcomes cannot be fully controlled. When you improve E-E-A-T signals, strengthen topical authority or refine entity relationships, you are influencing how systems interpret your information. The results are guided by probability rather than certainty.

Causation and Correlation

Causation describes direct influence. You know why something happened because the link between action and outcome is clear.

Correlation describes observed patterns. You can see that something appears to work, but you cannot fully explain why.

When you cross these two dimensions, you create four quadrants. Each one represents a different type of reasoning and a different kind of confidence.

1. Deterministic + Causation = The Engineering Zone

This is where technical SEO lives. It covers crawlability, canonicalisation, redirects, schema validation and site performance. You can test, prove and repeat the outcomes with confidence.

Examples:

• Fixing 404 errors

• Implementing redirects

• Validating structured data

• Resolving duplicate content

2. Deterministic + Correlation = The Pattern Zone

This is where repeatable patterns appear. These are tactics that consistently work even if we cannot pinpoint a single direct cause.

Examples:

• Improving Core Web Vitals

• Optimising internal linking

• Adjusting content length

• Enhancing page speed

3. Probabilistic + Causation = The Strategic Zone

This is the space for influence. It includes authority, trust, topical depth and contextual relationships. You can create strong conditions for success, but the system’s interpretation always introduces some uncertainty.

Examples:

• Building topical authority

• Strengthening E-E-A-T signals

• Optimising entity relationships

• Growing brand mentions

4. Probabilistic + Correlation = The Discovery Zone

This is the most exploratory space. It covers AI summaries, generative search experiences and emerging signals from assistants or agents. Here you are testing hypotheses and learning how systems interpret relevance.

Examples:

• Optimising for AI Overviews

• Testing conversational search

• Experimenting with agent-based discovery

• Exploring new platforms and surfaces

Every quadrant has value. The purpose of the framework is not to rank one above another but to identify where each activity belongs so it can be measured and explained correctly.
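One way to put the four quadrants to work is to tag each task with its two dimensions and let the zone follow from the pair. The sketch below is only an illustration of that mapping. The class and function names (`Reasoning`, `Evidence`, `Task`, `group_by_zone`) are my own assumptions for the example, not terminology from the whitepaper.

```python
from dataclasses import dataclass
from enum import Enum


class Reasoning(Enum):
    DETERMINISTIC = "deterministic"
    PROBABILISTIC = "probabilistic"


class Evidence(Enum):
    CAUSATION = "causation"
    CORRELATION = "correlation"


# The four quadrants exactly as named in the framework.
ZONES = {
    (Reasoning.DETERMINISTIC, Evidence.CAUSATION): "Engineering Zone",
    (Reasoning.DETERMINISTIC, Evidence.CORRELATION): "Pattern Zone",
    (Reasoning.PROBABILISTIC, Evidence.CAUSATION): "Strategic Zone",
    (Reasoning.PROBABILISTIC, Evidence.CORRELATION): "Discovery Zone",
}


@dataclass(frozen=True)
class Task:
    name: str
    reasoning: Reasoning
    evidence: Evidence

    @property
    def zone(self):
        return ZONES[(self.reasoning, self.evidence)]


def group_by_zone(tasks):
    """Return a dict of zone name -> list of task names, with every zone present."""
    grouped = {zone: [] for zone in ZONES.values()}
    for task in tasks:
        grouped[task.zone].append(task.name)
    return grouped
```

A report built on `group_by_zone` keeps all four zones visible, including any that are empty, which makes gaps in the mix of work easier to spot.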

Traditional SEO reporting treats all work the same. Everything is rolled into traffic, impressions and conversions. This hides the fact that not all actions operate under the same logic. Some are precise and measurable. Others are interpretive and probabilistic.

By separating them into quadrants, you can report with clarity. You can show what you control, what you influence and what you are still learning. It builds trust with stakeholders because it replaces the illusion of certainty with transparent reasoning.

It also improves how effort is allocated. Deterministic work can be automated or benchmarked. Probabilistic work benefits from research and experimentation. The balance keeps operations stable while allowing space for innovation.

Every task within the framework receives a confidence value. This is not a vanity score. It is a practical indicator of how predictable or uncertain an outcome is.

High confidence means strong control and low uncertainty.

Medium confidence reflects influence that depends on context.

Low confidence shows exploration or high uncertainty.

Tracking these values over time reveals how predictable, adaptable or experimental your SEO operation is becoming.

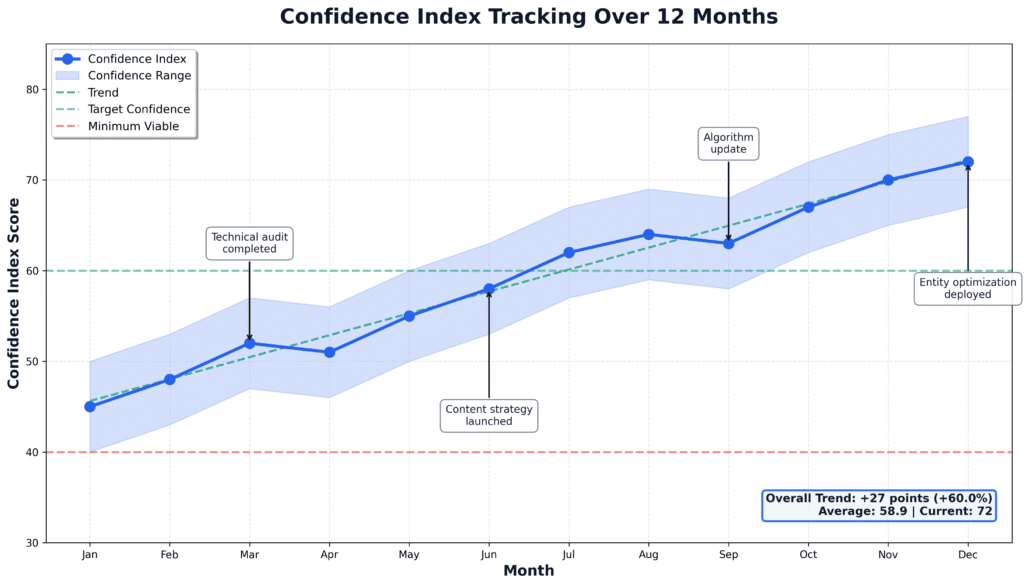

Over time, the pattern forms a Confidence Index. It combines the stability of deterministic work with the variability of probabilistic work. A positive trend shows predictability. A negative trend suggests volatility or innovation. A balanced trend shows a healthy mix of both.

The goal is not perfection. The goal is awareness. Confidence tells you whether the systems that interpret your work understand it, trust it and act on it as expected.

To understand why the Confidence in Search Systems Framework matters, we need to look at how AI search actually works. Modern discovery now operates across four connected layers: retrieval, representation, reasoning and action.

Retrieval is where information is collected from open sources, APIs or indexes. It is the layer that traditional SEO still focuses on. Crawlability, accessibility and structured data are all about retrieval.

It is the foundation of visibility. If a system cannot reach your content, it cannot use it. But retrieval alone is no longer enough. It is only the starting point.

Representation is the process of converting information into embeddings or vector representations. It is how machines understand meaning rather than just words.

At this stage, consistency becomes critical. If your brand, product or service is described differently across sources, the system’s confidence in what you represent begins to break down.

Representation optimisation means improving the clarity of meaning. It is about:

• Using structured data correctly and consistently

• Aligning entity names and descriptions across pages

• Ensuring factual accuracy

• Strengthening contextual links between topics

When representation is strong, the system understands your content as a coherent whole rather than as disconnected pieces.
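Consistency of entity names and descriptions is one part of representation that can be audited directly. The sketch below assumes you have already extracted the JSON-LD objects from each page into plain dictionaries, and that entities share an `@id` across pages. It then reports any entity whose `name` or `description` differs between pages. The function name and input shape are assumptions made for this example.

```python
from collections import defaultdict


def find_inconsistent_entities(pages, fields=("name", "description")):
    """Report entities described differently across pages.

    pages: dict mapping a URL to a list of JSON-LD objects (dicts) on that page.
    Returns {entity_id: {field: set_of_distinct_values}} for conflicting fields only.
    """
    seen = defaultdict(lambda: defaultdict(set))
    for entities in pages.values():
        for entity in entities:
            entity_id = entity.get("@id")
            if not entity_id:
                continue  # without a shared @id we cannot match entities across pages
            for field in fields:
                value = entity.get(field)
                if isinstance(value, str):
                    seen[entity_id][field].add(value.strip())

    conflicts = {}
    for entity_id, by_field in seen.items():
        differing = {f: vals for f, vals in by_field.items() if len(vals) > 1}
        if differing:
            conflicts[entity_id] = differing
    return conflicts
```

An empty result does not prove that a system understands you. It only removes one avoidable source of doubt.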

Reasoning is where AI systems form conclusions. They connect vectors, weigh probabilities and decide which information to surface.

At this stage, confidence becomes central. The model effectively asks, “How likely is this to be accurate?” and “How often does this entity appear in relevant and trusted contexts?”

Reasoning confidence can be strengthened by:

• Building consistent topical authority

• Earning credible mentions across trusted sites

• Reducing conflicting information

• Maintaining freshness and accuracy

Reasoning confidence is the difference between being retrieved and being represented. It determines whether a system chooses your data when forming an answer.

Action is the newest and most transformative layer. Assistants and agents can now act on information. They can make recommendations, perform bookings or generate summaries on behalf of users.

To be part of that process, your data must be machine-actionable. It must be structured, retrievable and trustworthy enough for systems to use autonomously.

Action confidence depends on:

• Accessible APIs or data feeds

• Clear content licensing and permissions

• Reliable pricing, availability or specification data

• Transparent source attribution

When a system can act confidently on your data, it can integrate it directly into the user journey without a click ever taking place.
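As a rough illustration of machine-actionable data, the sketch below builds schema.org `Product` markup with an `Offer` that states price, currency and availability, then wraps it in a JSON-LD script tag. The product details are made up and the helper names (`build_product_jsonld`, `to_script_tag`) are assumptions for this example. Whether any given assistant or agent reads this markup is not something the sketch can guarantee.

```python
import json


def build_product_jsonld(name, sku, price, currency, in_stock):
    """Build a schema.org Product with a single Offer as a plain dict."""
    availability = (
        "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
    )
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",  # fixed two-decimal string, e.g. "19.90"
            "priceCurrency": currency,
            "availability": availability,
        },
    }


def to_script_tag(data):
    """Serialise the dict into a JSON-LD script tag ready to place in a page."""
    return (
        '<script type="application/ld+json">'
        + json.dumps(data, indent=2)
        + "</script>"
    )
```

Generating the markup from the same source as your pricing and stock data helps keep the two from drifting apart, which is the reliability that action confidence depends on.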

The further you move through these layers, the less direct control you have. Retrieval is deterministic. Representation and reasoning are probabilistic. Action often becomes fully autonomous.

This is why SEO now feels less predictable. The systems we optimise for are no longer following rules. They are learning patterns and weighing probabilities.

The Confidence in Search Systems Framework mirrors that reality. It gives you a structured way to measure how much control, influence or uncertainty exists across every layer.

Every activity within the framework contributes to a measurable trend called the Confidence Index. It combines what can be proven with what can only be influenced.

A positive index shows a predictable environment.

A negative index shows volatility or innovation.

A balanced index shows adaptability and steady learning.

It is not a truth score. It is a directional signal. Over time, it reveals how stable or experimental your visibility has become.
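This post does not give a formula for the index, so the snippet below is only one possible sketch and not the whitepaper's method. It assumes each task has been labelled high, medium or low confidence, assigns hypothetical weights of +1, 0 and -1, and averages them for a reporting period. The weights and the `tolerance` band that counts as "balanced" are assumptions chosen for illustration.

```python
from statistics import mean

# Hypothetical weights: an assumption for this sketch, not a published formula.
WEIGHTS = {"high": 1.0, "medium": 0.0, "low": -1.0}


def confidence_index(levels):
    """Average the signed weights of one period's task confidence levels."""
    if not levels:
        raise ValueError("at least one task is required")
    return mean([WEIGHTS[level] for level in levels])


def describe(index, tolerance=0.2):
    """Translate an index into the three readings used in the framework."""
    if index > tolerance:
        return "predictable"
    if index < -tolerance:
        return "volatile or innovative"
    return "balanced"
```

Computed per month or per quarter, the sequence of values gives the direction of travel, which matters more here than any single number.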

The framework matters because it gives SEO professionals and organisations a clear, honest way to communicate.

Organic traffic will continue to decline. AI-driven discovery will continue to grow. The gap between what we measure and what truly drives visibility will widen.

The question is not whether this change will happen. It already has. The real question is whether your organisation has a framework for measuring visibility in a world that values trust and confidence above all else.

The Confidence in Search Systems Framework provides that structure. It turns uncertainty into something measurable. It replaces guesswork with reasoning. And it helps teams prove influence even when traditional metrics no longer tell the full story.

The future of search is not about chasing traffic. It is about earning confidence.

I never set out to build a framework. I just wanted to make sense of what I was seeing.

It started as a way to organise my own thinking. I was trying to understand why some SEO work felt predictable while other work felt like influence rather than control. Then one day it clicked. Every idea, every tactic, every action could be grouped as deterministic or probabilistic, a distinction that Mike King has articulated brilliantly in his recent work.

That was the lightbulb moment.

Later, causation and correlation came into it because they made the picture clearer. Those terms are familiar to anyone who works in data or SEO, and they helped show that not everything we observe can be proven.

The Confidence Index came next, when I started thinking about how to explain all of this to stakeholders. How could I show that what we do still has structure and value, even when visibility no longer means traffic?

At that point it stopped being a notebook idea and became something I knew I had to share. What began as a personal framework turned into a full model that connects human reasoning with machine reasoning.

I built it to help myself think more clearly about search. I’m sharing it now in the hope it helps others do the same.

The complete Confidence in Search Systems Framework whitepaper explores the model in full, including measurement methods, scoring examples and ways to gain buy-in inside your organisation.

You can read or download it here: The Confidence in Search Systems Framework

Acknowledgement

Special thanks and credit to Mike King, whose research and writing around information retrieval, probabilistic SEO, and modern search reasoning have helped influence and inspire parts of this framework. His work has been instrumental in shaping how I’ve approached the relationship between confidence, understanding, and visibility in intelligent systems.
