- December 23, 2025

Redirect mapping has always been one of those tedious but necessary parts of technical SEO. Whether it’s a site migration, a rebrand, or a URL restructure, making sure old pages point to their correct new equivalents is essential. The standard methods I’ve relied on for years are fuzzy matching, exact URL pattern recognition, and manual intervention for the stragglers.

Lately, I have been experimenting with something different: using embeddings for redirect mapping. It is a novel approach, but early results suggest it is worth further exploration.

Traditional redirect mapping is often a blend of automation and manual checking. The usual techniques include:

While these methods work, they often fall short in edge cases where URLs have changed significantly. This is where embeddings come in. Instead of comparing URLs based purely on text similarity, embeddings capture the contextual meaning of the URL itself.

This is useful when:

By leveraging Ollama and the Llama3 model, I have been running tests that compare embeddings against traditional fuzzy matching, and the results so far are promising.

Embeddings convert text (in this case, URLs) into high-dimensional vectors, allowing comparisons based on meaning rather than just direct character similarity.

For redirect mapping, this means:

The higher the similarity score, the more likely the two URLs are contextually related. This method doesn’t just look at surface-level text matches but instead assesses whether the two URLs represent similar intent.
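To make the "similarity score" concrete, here is a minimal sketch that assumes the score is cosine similarity, a common choice for comparing embeddings (I'm not claiming it is the only option). The three-dimensional vectors are made-up stand-ins; real embeddings have far more dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)

# Toy vectors standing in for real URL embeddings (illustrative values only)
old_vec = [0.9, 0.1, 0.3]
close_vec = [0.8, 0.2, 0.4]    # points in a similar direction to old_vec
far_vec = [-0.1, 0.9, -0.5]    # points somewhere quite different

print(cosine_similarity(old_vec, close_vec))
print(cosine_similarity(old_vec, far_vec))
```

The first score comes out higher than the second, which is the signal used to rank candidate redirect targets.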

For example:

Old URL: products/blue-widgets-2023

New URL: /shop/widgets/blue-collection

A traditional fuzzy match might not score these URLs highly, but embeddings can detect that they both relate to blue widgets and suggest a strong match.
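For a rough feel of the fuzzy side of that comparison, the sketch below scores the two example URLs with Python's built-in `difflib.SequenceMatcher`. That is a stand-in I've assumed for illustration; a dedicated fuzzy matching library will give different numbers. The `url_tokens` helper is hypothetical and just shows which terms the two URLs share.

```python
import re
from difflib import SequenceMatcher

def fuzzy_score(a, b):
    """Character-level similarity ratio between 0.0 and 1.0."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()

def url_tokens(url):
    """Split a URL path into lowercase word tokens (hypothetical helper)."""
    return {token for token in re.split(r"[^a-z0-9]+", url.lower()) if token}

old_url = "products/blue-widgets-2023"
new_url = "/shop/widgets/blue-collection"

# Character-based score: penalised by the reordered and replaced segments
print(round(fuzzy_score(old_url, new_url), 2))

# The shared terms that a meaning-based comparison can pick up on
print(sorted(url_tokens(old_url) & url_tokens(new_url)))  # ['blue', 'widgets']
```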

I have been running this in parallel with traditional fuzzy matching to see how they compare. The early takeaway? Embeddings and fuzzy matching work well together rather than replacing one another.

In my testing, embeddings alone didn’t always provide better results than fuzzy matching, but when combined, they helped reduce false positives and improve accuracy.
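One simple way to combine the two signals is a weighted blend with cut-offs, sketched below. The weight, the thresholds, and the function names are all assumptions for illustration, not tuned values from my testing.

```python
def combined_score(fuzzy, embedding, fuzzy_weight=0.5):
    """Blend two scores in the 0..1 range; fuzzy_weight is an assumed default."""
    return fuzzy_weight * fuzzy + (1 - fuzzy_weight) * embedding

def classify(fuzzy, embedding, accept=0.75, review=0.5):
    """Bucket a candidate pair using illustrative thresholds."""
    score = combined_score(fuzzy, embedding)
    if score >= accept:
        return "redirect"
    if score >= review:
        return "manual review"
    return "no match"

print(classify(0.9, 0.9))  # both signals agree strongly
print(classify(0.9, 0.2))  # looks similar as text, but the embedding disagrees
print(classify(0.2, 0.3))  # neither signal supports the match
```

A pair that only one method likes gets pulled down into the review bucket instead of being auto-redirected, which is one plausible way a combination trims false positives.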

For sites with thousands or even millions of URLs, handling redirects manually is not an option. Embeddings offer a way to automate more of the process without relying solely on fuzzy text matching.

The key takeaway is that this approach isn’t perfect yet, but it’s worth exploring. I can already see potential in cases where:

This is just the start of testing, but I expect embeddings to become another tool in the SEO migration playbook.

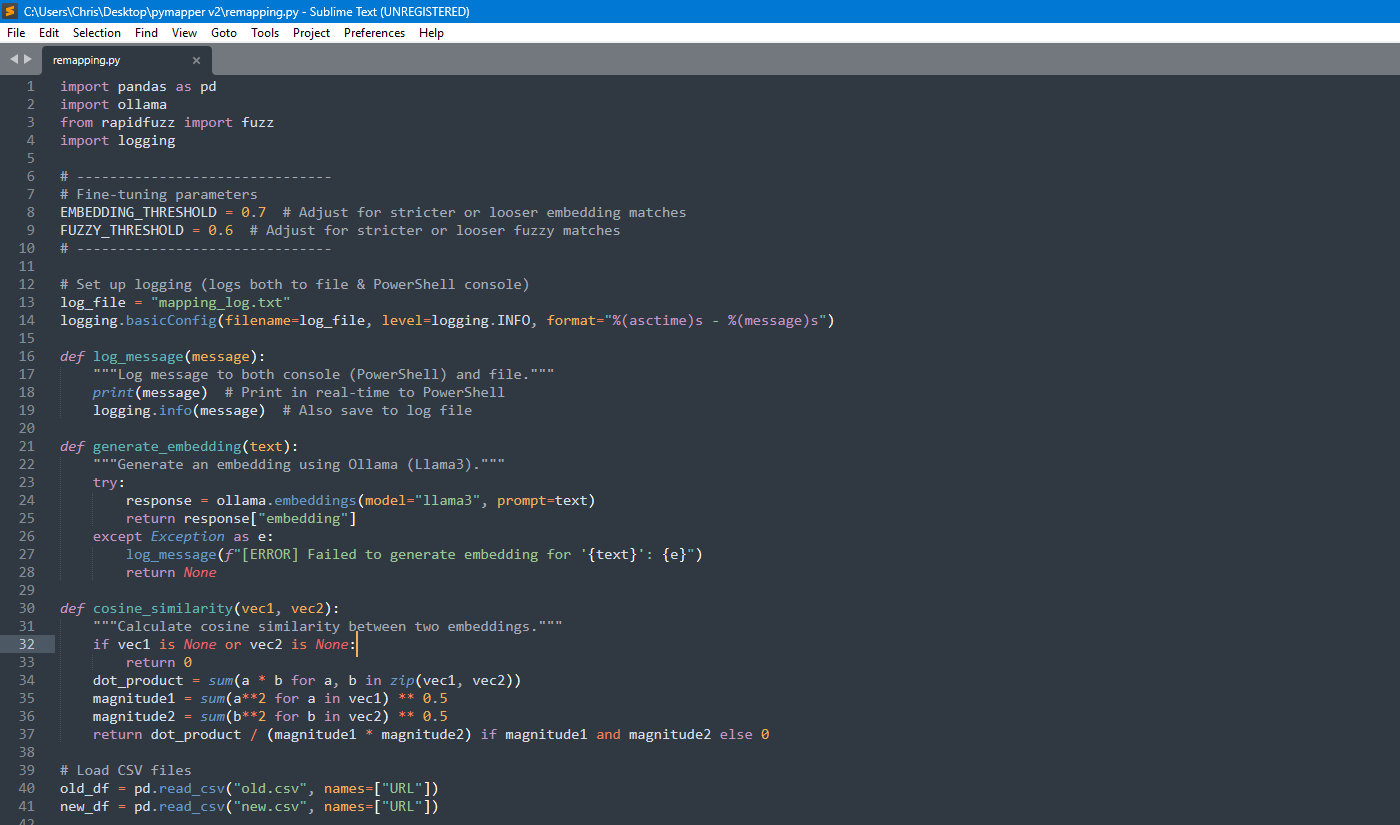

I won’t go into all the details, but the Python script I’ve been refining combines Ollama (Llama3 embeddings) with fuzzy matching. It:

This approach is still in early testing, but so far, the results are on par with fuzzy matching, with the potential to improve over time. The next step is refining thresholds and testing on different migration cases to see where embeddings outperform traditional methods.
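This is not the script linked below, just a stripped-back sketch of the general shape. It assumes Ollama is running locally on its default port with the `llama3` model pulled, and that the `/api/embeddings` endpoint (which takes `model` and `prompt`) is available in your version; check the Ollama API docs before relying on it. `difflib` stands in for a fuzzy matching library, and the 50/50 weighting and all function names are illustrative.

```python
import json
import math
import urllib.request
from difflib import SequenceMatcher

# Assumed default address of a local Ollama server
OLLAMA_URL = "http://localhost:11434/api/embeddings"

def ollama_embed(text, model="llama3"):
    """Request an embedding vector for `text` from a local Ollama server."""
    payload = json.dumps({"model": model, "prompt": text}).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["embedding"]

def cosine(a, b):
    """Cosine similarity between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0

def fuzzy(a, b):
    """Character-level similarity ratio between 0.0 and 1.0."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()

def map_redirects(old_urls, new_urls, embed=ollama_embed, weight=0.5):
    """Return {old_url: (best_new_url, blended_score)}."""
    if not new_urls:
        raise ValueError("new_urls must not be empty")
    # Embed each new URL once and reuse the vector for every old URL
    new_vectors = {url: embed(url) for url in new_urls}
    mapping = {}
    for old in old_urls:
        old_vec = embed(old)
        scored = [
            (weight * fuzzy(old, new) + (1 - weight) * cosine(old_vec, vec), new)
            for new, vec in new_vectors.items()
        ]
        score, best = max(scored)  # highest blended score wins
        mapping[old] = (best, round(score, 3))
    return mapping

# Example call (needs a running Ollama server, so it is left commented out):
# print(map_redirects(["products/blue-widgets-2023"],
#                     ["/shop/widgets/blue-collection", "/shop/contact"]))
```

The `embed` parameter is there so the embedding call can be swapped for another model or a stub during testing. Comparing every old URL against every new URL is fine for a few thousand URLs, but would need batching or a vector index at larger scale.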

Test Script Here: https://chrisleverseo.com/forum/t/embeddings-for-redirect-mapping-using-ollama-llama3-embeddings-with-fuzzy-matching.136/

I’m not saying embeddings will replace traditional redirect mapping methods just yet. But the potential is there. Right now, this approach works best when combined with fuzzy matching rather than as a standalone method. I need to test more.

If embeddings can reduce the manual work involved in site migrations (just like fuzzy matching did), that’s worth further testing. As models improve and embeddings become more sophisticated, I wouldn’t be surprised if SEO tooling starts integrating this approach natively.

For now, I’ll keep experimenting, refining, and seeing how embeddings can be applied to more complex SEO challenges. If you’re running large-scale migrations and want to test new approaches, this is definitely something to explore.

I’ll wrap this up by saying this round of experimenting was focused purely on URLs. In a real-world migration, I’d normally pull in other attributes like page titles, headings, SKUs, breadcrumb paths, and any other common identifiers to improve accuracy. Before the tech bros start getting their knickers in a twist, I know there’s more to it, but this was a first pass at seeing what embeddings could do on their own.
