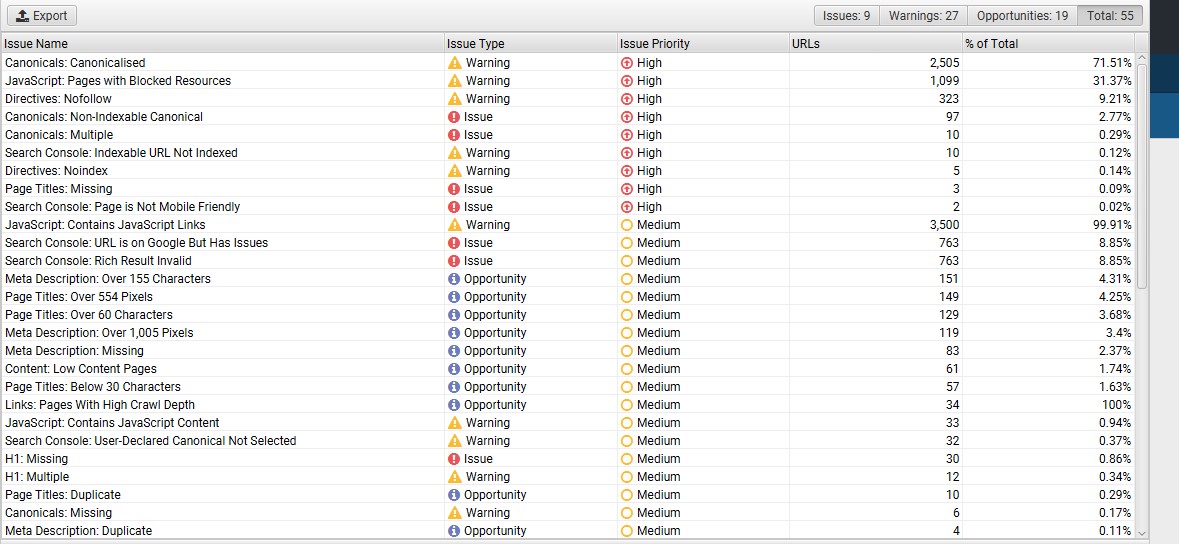

Screaming Frog version 17 adds a new issues summary report and summary export. It should become a very useful tool for up-and-coming SEOs who need a quick snapshot of a site's crawling and indexing health.

Below is an example issues summary export. There is lots of useful information in that data. There are even tips on how to fix each issue, which you can use in your tickets to developers and clients.

| Issue Name | Issue Type | Issue Priority | URLs | % of Total | Description | How To Fix |

| Canonicals: Canonicalised | Warning | High | 2505 | 71.51 | Pages that have a canonical to a different URL. The URL is 'canonicalised' to another location. This means the search engines are being instructed to not index the page, and the indexing and linking properties should be consolidated to the URL in the canonical. | These URLs should be reviewed carefully to ensure the indexing and link signals are being consolidated to the correct URL. In a perfect world, a website wouldn't need to canonicalise any URLs as only canonical versions would be linked to internally on a website, but often they are required due to various circumstances outside of control, and to prevent duplicate content. Update internal links to canonical versions of URLs where possible. |

| JavaScript: Pages with Blocked Resources | Warning | High | 1099 | 31.37 | Pages with resources (such as images, JavaScript and CSS) that are blocked by robots.txt. This filter will only populate when JavaScript rendering is enabled (blocked resources will appear under 'Blocked by Robots.txt' in default 'text only' crawl mode). This can be an issue as the search engines might not be able to access critical resources to be able to render pages accurately. | Update the robots.txt to allow all critical resources to be crawled and used for rendering of the website's content. Resources that are not critical (e.g. Google Maps embed) can be ignored. |

| Directives: Nofollow | Warning | High | 323 | 9.21 | URLs containing a 'nofollow' directive in either a robots meta tag or X-Robots-Tag in the HTTP header. This is a 'hint' which tells the search engines not to follow any links on the page for crawling. This is generally used by mistake in combination with 'noindex', when there is no need to include this directive as it stops 'PageRank' from being passed onwards. To crawl pages with a nofollow directive within the SEO Spider, enable 'Follow Internal Nofollow' via 'Config > Spider'. | URLs with a 'nofollow' should be reviewed carefully to ensure that links shouldn't be crawled and PageRank shouldn't be passed on. If outlinks should be crawled and PageRank should be passed onwards, then the 'nofollow' directive should be removed. |

| Canonicals: Non-Indexable Canonical | Issue | High | 97 | 2.77 | Pages with a canonical URL that is non-indexable. This will include canonicals which are blocked by robots.txt, no response, redirect (3XX), client error (4XX), server error (5XX), are 'noindex' or 'canonicalised' themselves. This means the search engines are being instructed to consolidate indexing and link signals to a non-indexable page, which often leads to them ignoring the canonical, but may also lead to unpredictability in indexing and ranking. Export pages, their canonicals and status codes via 'Reports > Canonicals > Non-Indexable Canonicals'. | Ensure canonical URLs are to accurate indexable pages to avoid them being ignored by search engines, and any potential indexing or ranking unpredictability. |

| Canonicals: Multiple | Issue | High | 10 | 0.29 | Pages with multiple canonicals set for a URL (either multiple link elements, HTTP header, or both combined). This can lead to unpredictability, as there should only be a single canonical URL set by a single implementation (link element, or HTTP header) for a page. | Specify a single canonical URL using a single approach (link element, or HTTP header) for every page to avoid any potential mix ups. |

| Search Console: Indexable URL Not Indexed | Warning | High | 10 | 0.12 | URLs found in the crawl that are indexable, but are not indexed by Google and won't appear in the search results. This can include URLs that are unknown to Google, or those that have been discovered or crawled but not indexed, and more. | Review URLs not indexed by Google and carefully consider whether they are important. For those that are important and should be indexed, review their 'coverage' description and how they could be improved to be crawled, indexed and ranked. |

| Directives: Noindex | Warning | High | 5 | 0.14 | URLs containing a 'noindex' directive in either a robots meta tag or X-Robots-Tag in the HTTP header. This instructs the search engines not to index the page. The page will still be crawled (to see the directive), but it will then be dropped from the index. | URLs with a 'noindex' should be reviewed carefully to ensure they are correct and shouldn't be indexed. If these pages should be indexed, then the 'noindex' directive should be removed. |

| Page Titles: Missing | Issue | High | 3 | 0.09 | Pages which have a missing page title element, the content is empty, or has a whitespace. Page titles are read and used by both users and the search engines to understand what a page is about. They are important for SEO as page titles are used in rankings, and vital for user experience, as they are displayed in browsers, search engine results and on social networks. | It's essential to write concise, descriptive and unique page titles on every indexable URL to help users, and enable search engines to score and rank the page for relevant search queries. |

| Search Console: Page is Not Mobile Friendly | Issue | High | 2 | 0.02 | URLs that have usability issues on mobile devices. | Review the 'Mobile Usability Issues' column and fix any mobile usability errors found by Google to ensure the page is friendly for users and is rewarded in ranking in the Google search results. |

| JavaScript: Contains JavaScript Links | Warning | Medium | 3500 | 99.91 | Pages that contain hyperlinks that are only discovered in the rendered HTML after JavaScript execution. These hyperlinks are not in the raw HTML. | While Google is able to render pages and see client-side only links, consider including important links server side in the raw HTML. |

| Search Console: URL is on Google But Has Issues | Issue | Medium | 763 | 8.85 | URLs that have been indexed and can appear in Google Search results, but there are some problems with mobile usability, AMP or Rich results that might mean it doesn't appear in an optimal way. | Review URLs and use the 'Page is Not Mobile Friendly', 'AMP URL Invalid' and 'Rich Result Invalid' filters to segment by issue type. Fix any mobile usability, AMP or Rich results errors as necessary. |

| Search Console: Rich Result Invalid | Issue | Medium | 763 | 8.85 | URLs that have an error with one or more rich result enhancements that will prevent the rich result from showing in the Google search results. | Review the 'Rich Results Type Errors' column to see which Google features have invalid items. Export specific errors discovered by using the "Bulk Export > URL Inspection > Rich Results" export. Fix all errors to ensure rich results show in the Google search results. |

| Meta Description: Over 155 Characters | Opportunity | Medium | 151 | 4.31 | Pages which have meta descriptions over the configured limit. Characters over this limit might be truncated in Google's search results. | Write concise meta descriptions to ensure important words are not truncated in the search results, and not visible to users. |

| Page Titles: Over 554 Pixels | Opportunity | Medium | 149 | 4.25 | Pages which have page titles over Google's estimated pixel length limit for titles in search results. Google snippet length is actually based upon pixels limits, rather than a character length. The SEO Spider tries to match the latest pixel truncation points in the SERPs, but it is an approximation and Google adjusts them frequently. | Write concise page titles to ensure important words are not truncated in the search results, not visible to users and potentially weighted less in scoring. |

| Page Titles: Over 60 Characters | Opportunity | Medium | 129 | 3.68 | Pages which have page titles that exceed the configured limit. Characters over this limit might be truncated in Google's search results and carry less weight in scoring. | Write concise page titles to ensure important words are not truncated in the search results, not visible to users and potentially weighted less in scoring. |

| Meta Description: Over 1,005 Pixels | Opportunity | Medium | 119 | 3.4 | Pages which have meta descriptions over Google's estimated pixel length limit for snippets. Google snippet length is actually based upon pixels limits, rather than a character length. The SEO Spider tries to match the latest pixel truncation points in the SERPs, but it is an approximation and Google adjusts them frequently. | Write concise meta descriptions to ensure important words are not truncated in the search results, and not visible to users. |

| Meta Description: Missing | Opportunity | Medium | 83 | 2.37 | Pages which have a missing meta description, the content is empty or has a whitespace. This is a missed opportunity to communicate the benefits of your product or service and influence click through rates for important URLs. | It's important to write unique and descriptive meta descriptions on key pages to communicate the purpose of the page to users, and entice them to click on your result over the competition. It can also mean Google use this description for snippets in the search results for some queries, rather than make up their own based upon the content of the page. |

| Content: Low Content Pages | Opportunity | Medium | 61 | 1.74 | Pages with a word count that is below the default 200 words. The word count is based upon the content area settings used in the analysis which can be configured via 'Config > Content > Area'. There isn't a minimum word count for pages in reality, but the search engines do require descriptive text to understand the purpose of a page. This filter should only be used as a rough guide to help identify pages that might be improved by adding more descriptive content in the context of the website and page's purpose. Some websites, such as ecommerce, will naturally have lower word counts, which can be acceptable if a product's details can be communicated efficiently. | Consider including additional descriptive content to help the user and search engines better understand the page. |

| Page Titles: Below 30 Characters | Opportunity | Medium | 57 | 1.63 | Pages which have page titles under the configured limit. This isn't necessarily an issue, but it does indicate there might be room to target additional keywords or communicate your USPs. | Consider updating the page title to take advantage of the space left to include additional target keywords or USPs. |

| JavaScript: Contains JavaScript Content | Warning | Medium | 33 | 0.94 | Pages that contain body text that's only discovered in the rendered HTML after JavaScript execution. | While Google is able to render pages and see client-side only content, consider including important content server side in the raw HTML. |

| Search Console: User-Declared Canonical Not Selected | Warning | Medium | 32 | 0.37 | Google has chosen to index a different URL to the one declared by the user in the HTML or HTTP Header. Canonicals are hints rather than directives. Sometimes Google does a great job of selecting the canonical version, other times it's less than ideal. | Review which URLs Google has chosen to index instead of the canonical URL declared. Consider whether this is accurate and if any consolidation, updating of canonicals, internal links or XML Sitemaps are necessary. |

| H1: Missing | Issue | Medium | 30 | 0.86 | Pages which have a missing <h1>, the content is empty or has a whitespace. The <h1> should describe the main title and purpose of the page and are considered to be one of the stronger on-page ranking signals. | Ensure important pages have concise, descriptive and unique headings to help users, and enable search engines to score and rank the page for relevant search queries. |

| H1: Multiple | Warning | Medium | 12 | 0.34 | Pages which have multiple <h1>s. While this is not strictly an issue because HTML5 standards allow multiple <h1>s on a page, there are some problems with this modern approach in terms of usability. It's advised to use heading rank (h1-h6) to convey document structure. The classic HTML4 standard defines there should only be a single <h1> per page, and this is still generally recommended for users and SEO. | Consider updating the HTML to include a single <h1> on each page, and utilising the full heading rank between (h2 - h6) for additional headings. |

| Page Titles: Duplicate | Opportunity | Medium | 10 | 0.29 | Pages which have duplicate page titles. It's really important to have distinct and unique page titles for every page. If every page has the same page title, then it can make it more challenging for users and the search engines to understand one page from another. | Update duplicate page titles as necessary, so each page contains a unique and descriptive title for users and search engines. If these are duplicate pages, then fix the duplicated pages by linking to a single version, and redirect or use canonicals where appropriate. |

| Canonicals: Missing | Warning | Medium | 6 | 0.17 | Pages that have no canonical URL present either as a link element, or via HTTP header. If a page doesn't indicate a canonical URL, Google will identify what they think is the best version or URL. This can lead to ranking unpredictability when there are multiple versions discovered, and hence generally all URLs should specify a canonical version. | Specify a canonical URL for every page to avoid any potential ranking unpredictability if multiple versions of the same page are discovered on different URLs. |

| Meta Description: Duplicate | Opportunity | Medium | 4 | 0.11 | Pages which have duplicate meta descriptions. It's really important to have distinct and unique meta descriptions that communicate the benefits and purpose of each page. If they are duplicate or irrelevant, then they will be ignored by search engines in their snippets. | Update duplicate meta descriptions as necessary, so important pages contain a unique and descriptive meta description for users and search engines. If these are duplicate pages, then fix the duplicated pages by linking to a single version, and redirect or use canonicals where appropriate. |

| Page Titles: Below 200 Pixels | Opportunity | Medium | 3 | 0.09 | Pages which have page titles much shorter than Google's estimated pixel length limit. This isn't necessarily an issue, but it does indicate there might be room to target additional keywords or communicate your USPs. | Consider updating the page title to take advantage of the space left to include additional target keywords or USPs. |

| JavaScript: H1 Updated by JavaScript | Warning | Medium | 2 | 0.06 | Pages that have h1s that are modified by JavaScript. This means the h1 in the raw HTML is different to the h1 in the rendered HTML. | While Google is able to render pages and see client-side only content, consider including important content server side in the raw HTML. |

| Meta Description: Below 70 Characters | Opportunity | Medium | 1 | 0.03 | Pages which have meta descriptions below the configured limit. This isn't strictly an issue, but an opportunity. There is additional room to communicate benefits, USPs or call to actions. | Consider updating the meta description to take advantage of the space left to include additional benefits, USPs or call to actions to improve click through rates (CTR). |

| Meta Description: Below 400 Pixels | Opportunity | Medium | 1 | 0.03 | Pages which have meta descriptions much shorter than Google's estimated pixel length limit. This isn't necessarily an issue, but it does indicate there might be room to communicate benefits, USPs or call to actions. | Consider updating the meta description to take advantage of the space left to include additional benefits, USPs or call to actions to improve click through rates (CTR). |

| Search Console: No Search Analytics Data | Warning | Low | 4125 | 47.83 | URLs that are not returning impressions in the performance report in Google Search Console. URLs either didn't receive any impressions, or perhaps the URLs in the crawl are just different to those in the GSC profile selected. | Review URLs with no search analytics data and consider whether this is expected, or if they could be improved. Please select the correct property in GSC for accurate data. |

| Search Console: Non-Indexable with Search Analytics Data | Warning | Low | 3559 | 41.26 | URLs that are classed as non-indexable, but have recorded impressions in the performance report in Google Search Console. | Review non-indexable URLs with search analytics data and consider whether this is expected, if Google is ignoring 'hints' such as canonicals, or whether pages should be made indexable. |

| Security: Missing Content-Security-Policy Header | Warning | Low | 3506 | 40.59 | URLs that are missing the Content-Security-Policy response header. This header allows a website to control which resources are loaded for a page. This policy can help guard against cross-site scripting (XSS) attacks that exploit the browser's trust of the content received from the server. The SEO Spider only checks for existence of the header, and does not interrogate the policies found within the header to determine whether they are well set-up for the website. This should be performed manually. | Set a strict Content-Security-Policy response header across all pages to help mitigate cross site scripting (XSS) and data injection attacks. |

| Security: Missing X-Content-Type-Options Header | Warning | Low | 3506 | 40.59 | URLs that are missing the 'X-Content-Type-Options' response header with a 'nosniff' value. In the absence of a MIME type, browsers may 'sniff' to guess the content type to interpret it correctly for users. However, this can be exploited by attackers who can try and load malicious code, such as JavaScript via an image they have compromised. | To minimise security issues, the X-Content-Type-Options response header should be supplied and set to 'nosniff'. This instructs browsers to rely only on the Content-Type header and block anything that does not match accurately. This also means the content-type set needs to be accurate. |

| Security: Missing Secure Referrer-Policy Header | Warning | Low | 3506 | 40.59 | URLs missing 'no-referrer-when-downgrade', 'strict-origin-when-cross-origin', 'no-referrer' or 'strict-origin' policies in the Referrer-Policy header. When using HTTPS, it's important that the URLs do not leak in non-HTTPS requests. This can expose users to 'man in the middle' attacks, as anyone on the network can view them. | Consider setting a referrer policy of strict-origin-when-cross-origin. It retains much of the referrer's usefulness, while mitigating the risk of leaking data cross-origins. |

| Security: Unsafe Cross-Origin Links | Warning | Low | 3497 | 40.48 | URLs that link to external websites using the target="_blank" attribute (to open in a new tab), without using rel="noopener" (or rel="noreferrer") at the same time. Using target="_blank" alone leaves those pages exposed to both security and performance issues. The external links that contain the target="_blank" attribute by itself can be viewed in the 'outlinks' tab and 'target' column. They can be exported alongside the pages they are linked from via 'Bulk Export > Security > Unsafe Cross-Origin Links'. | The rel="noopener" link attribute should be used on any links that contain the target="_blank" attribute to avoid security and performance issues. |

| Security: Protocol-Relative Resource Links | Warning | Low | 3497 | 40.48 | URLs that load resources such as images, JavaScript and CSS using protocol-relative links. A protocol-relative link is simply a link to a URL without specifying the scheme (for example, //screamingfrog.co.uk). It helps save developers time from having to specify the protocol and lets the browser determine it based upon the current connection to the resource. However, this technique is now an anti-pattern with HTTPS everywhere, and can expose some sites to 'man in the middle' compromises and performance issues. | Update any resource links to be absolute links including the scheme (HTTPS) to avoid security and performance issues. |

| URL: Parameters | Warning | Low | 2409 | 27.89 | URLs that include parameters such as '?' or '&'. This isn't an issue for Google or other search engines to crawl unless at significant scale, but it's recommended to limit the number of parameters in a URL which can be complicated for users, and can be a sign of low value-add URLs. | Where possible use a static URL structure without parameters for key indexable URLs. However, changing URLs is a big decision, and often it's not worth changing them for SEO purposes alone. If URLs are changed, then appropriate 301 redirects must be implemented. |

| URL: Uppercase | Warning | Low | 1351 | 15.64 | URLs that have uppercase characters within them. URLs are case sensitive, so as best practice generally URLs should be lowercase, to avoid any potential mix ups and duplicate URLs. | Ideally lowercase characters should be used for URLs only. However, changing URLs is a big decision, and often it's not worth changing them for SEO purposes alone. If URLs are changed, then appropriate 301 redirects must be implemented. |

| URL: Underscores | Opportunity | Low | 1115 | 12.91 | URLs with underscores, which are not always seen as word separators by search engines. | Ideally hyphens should be used as word separators, rather than underscores. However, changing URLs is a big decision, and often it's not worth changing them for SEO purposes alone. If URLs are changed, then appropriate 301 redirects must be implemented. |

| URL: Over 115 Characters | Opportunity | Low | 1001 | 11.59 | URLs that are more than the configured length. This is generally not an issue, however research has shown that users prefer shorter, concise URL strings. | Where possible use logical and concise URLs for users and search engines. However, changing URLs is a big decision, and often it's not worth changing them for SEO purposes alone. If URLs are changed, then appropriate 301 redirects must be implemented. |

| H2: Multiple | Warning | Low | 988 | 28.2 | Pages which have multiple <h2>s. This is not an issue as HTML standards allow multiple <h2>'s when used in a logical hierarchical heading structure. However, this filter can help you quickly scan to review if they are used appropriately. | Ensure <h2>s are used in a logical hierarchical heading structure, and update where appropriate utilising the full heading rank between (h3 - h6) for additional headings. |

| H2: Duplicate | Opportunity | Low | 876 | 25.01 | Pages which have duplicate <h2>s. It's important to have distinct, unique and useful pages. If every page has the same <h2>, then it can make it more challenging for users and the search engines to understand one page from another. | Update duplicate <h2>s as necessary, so important pages contain a unique and descriptive <h2> for users and search engines. If these are duplicate pages, then fix the duplicated pages by linking to a single version, and redirect or use canonicals where appropriate. |

| Search Console: URL is Not on Google | Warning | Low | 493 | 5.72 | URLs not indexed by Google and won't appear in the search results. This filter can include non-indexable URLs (such as those that are 'noindex') as well as Indexable URLs that are able to be indexed. It's a catch all filter for anything not on Google according to the API. | Review URLs not indexed by Google and carefully consider whether they should or shouldn't be indexed. For those that should be indexed, review their 'coverage' description and how they could be improved to be indexed. |

| H1: Duplicate | Opportunity | Low | 69 | 1.97 | Pages which have duplicate <h1>s. It's important to have distinct, unique and useful main headings. If every page has the same <h1>, then it can make it more challenging for users and the search engines to understand one page from another. | Update duplicate <h1>s as necessary, so important pages contain a unique and descriptive <h1> for users and search engines. If these are duplicate pages, then fix the duplicated pages by linking to a single version, and redirect or use canonicals where appropriate. |

| URL: Non ASCII Characters | Warning | Low | 13 | 0.15 | URLs with characters outside of the ASCII character-set. Standards outline that URLs can only be sent using the ASCII character-set and some users may have difficulty with subtleties of characters outside this range. | URLs should be converted into a valid ASCII format, by encoding links to the URL with safe characters (made up of % followed by two hexadecimal digits). Today browsers and the search engines are largely able to transform URLs accurately. |

| URL: Multiple Slashes | Issue | Low | 10 | 0.12 | URLs that have multiple forward slashes in the path (example.com/page1//). This is generally by mistake and as best practice URLs should only have a single slash between sections of a path to avoid any potential mix ups and duplicate URLs within the string. This is excluding use within the protocol (https://). | Ideally only a single slash should be used for URLs. However, changing URLs is a big decision, and often it's not worth changing them for SEO purposes alone. If URLs are changed, then appropriate 301 redirects must be implemented. |

| H2: Missing | Warning | Low | 8 | 0.23 | Pages which have a missing <h2>, the content is empty or has a whitespace. The <h2> heading is often used to describe sections or topics within a document. They act as signposts for the user, and can help search engines understand the page. | Consider using logical and descriptive <h2>s on important pages that help the user and search engines better understand the page. |

| H2: Over 70 Characters | Opportunity | Low | 8 | 0.23 | Pages which have <h2>s over the configured limit. There is no hard limit for characters in an <h2>, however they should be clear and concise for users and long headings might be less helpful. | Write concise <h2>s for users, including target keywords where natural for users - without keyword stuffing. |

| Security: Bad Content Type | Warning | Low | 3 | 0.03 | This indicates any URLs where the actual content type does not match the content type set in the header. It also identifies any invalid MIME types used. When the X-Content-Type-Options: nosniff response header is set by the server this is particularly important, as browsers rely on the content type header to correctly process the page. This can cause HTML web pages to be downloaded instead of being rendered when they are served with a MIME type other than text/html for example. | Analyse URLs identified with a bad content type, and set an accurate MIME type in the content-type header. |

| URL: Internal Search | Warning | Low | 2 | 0.02 | URLs that might be part of the website's internal search function. Google and other search engines recommend blocking internal search pages from being crawled to limit sometimes duplicate and low quality pages from being crawled and indexed. | Most internal site searches are built for users, rather than search engines who may needlessly crawl and index them. As best practise most search sections of a site should not be linked to internally, and should be disallowed in robots.txt. |

| URL: Contains Space | Issue | Low | 1 | 0.01 | URLs that contain a space. These are considered unsafe and could cause the link to be broken when sharing the URL. Hyphens should be used as word separators instead of spaces. | Ideally hyphens should be used as word separators, rather than spaces. However, changing URLs is a big decision. If URLs are changed, then appropriate 301 redirects must be implemented. |

| H1: Over 70 Characters | Opportunity | Low | 1 | 0.03 | Pages which have <h1>s over the configured length. There is no hard limit for characters in an <h1>, however they should be clear and concise for users and long headings might be less helpful. | Write concise <h1>s for users, including target keywords where natural for users - without keyword stuffing. |
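
If you save the export as a CSV, a short script can turn it into a prioritised list of ticket drafts. The following is only a rough sketch, not anything Screaming Frog provides: it assumes the CSV uses the same column headers as the table above, and the function names (`load_issues`, `triage`, `ticket_text`) are made up for illustration. The sample rows are copied from the table with the long text columns shortened.

```python
import csv
import io

# Rank used for sorting; assumes the three priority labels seen in the export.
PRIORITY_RANK = {"High": 0, "Medium": 1, "Low": 2}

# A few rows from the table above, with Description and How To Fix shortened.
SAMPLE_EXPORT = """\
Issue Name,Issue Type,Issue Priority,URLs,% of Total,Description,How To Fix
H1: Missing,Issue,Medium,30,0.86,Pages which have a missing <h1>.,Ensure important pages have concise headings.
Canonicals: Multiple,Issue,High,10,0.29,Pages with multiple canonicals set for a URL.,Specify a single canonical URL using a single approach.
URL: Contains Space,Issue,Low,1,0.01,URLs that contain a space.,Use hyphens as word separators.
Page Titles: Missing,Issue,High,3,0.09,Pages which have a missing page title element.,Write concise page titles.
"""


def load_issues(csv_file):
    """Read export rows into dicts and convert the URL count to an int."""
    rows = list(csv.DictReader(csv_file))
    for row in rows:
        row["URLs"] = int(row["URLs"])
    return rows


def triage(rows):
    """Sort by priority (High first), then by affected URL count, largest first."""
    return sorted(
        rows,
        key=lambda r: (PRIORITY_RANK.get(r["Issue Priority"], 99), -r["URLs"]),
    )


def ticket_text(row):
    """Format one issue as plain text for a developer or client ticket."""
    return (
        f"[{row['Issue Priority']}] {row['Issue Name']} ({row['URLs']} URLs)\n"
        f"What: {row['Description']}\n"
        f"Fix: {row['How To Fix']}"
    )


issues = triage(load_issues(io.StringIO(SAMPLE_EXPORT)))
for issue in issues:
    print(ticket_text(issue))
    print()
```

For a real export, pass an open file to `load_issues` instead of the `io.StringIO` sample, for example `open("issues_export.csv", newline="", encoding="utf-8")`, where the file name is a placeholder for wherever you saved your own export.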